CARTA’s 7 Imperatives – Continuous Adaptive Risk and Trust Assessment by Gartner

In 2018, Neil MacDonald of Gartner made the following assumptions:

1. By 2020, 25% of business initiatives will adopt a CARTA approach – up from less than 5% in 2017.

2. By 2020, cognitive security analytics will perform 15% to 20% of the threat response functions currently performed by humans.

3. By 2020, 60% of companies will be an integral part of larger digital business ecosystems.

Of course, 2020 was unpredictable. I am still waiting for the data, but given the forced digitization we can reasonably assume that the figure in point three could reach 80%.

Ever since information became secret and resources became valuable, people have been striving to protect them effectively. Assessing the risk of how access is granted in the physical world (its scope and the number of people authorized) is already difficult enough and ranks high among business priorities. In the digital world, the level of risk to your assets changes as quickly as a new email account can be set up. Without a clear picture of risk in their organizations, security administrators protect data the only way they can: with restrictive measures under the principle of least privilege. The result is a system that says “no” when it should thoughtfully say “maybe” or “yes, but with restrictions.” Frustrated users then try to circumvent security, which in turn triggers more alerts and takes up security analysts’ time, and the vicious circle closes.

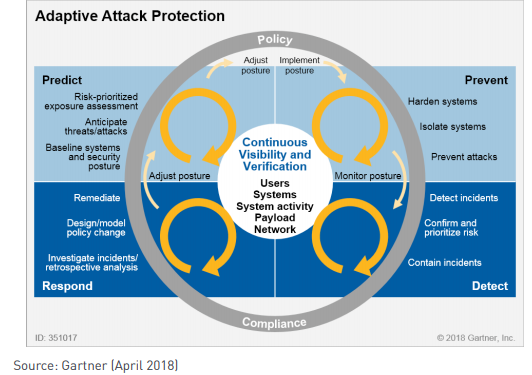

Gartner’s answer is CARTA (Continuous Adaptive Risk and Trust Assessment), an approach that treats the assessment of user risk and trust as a process. It takes continuous monitoring and analysis of the system as its starting point, enabling rapid detection of behavioral anomalies and rapid response to them; in other words, catching and responding to risky activities. Fundamental to this approach is the assumption that any malicious behavior is preceded by symptoms (which gradually increase a user’s risk). If you recognize them well, you can stop the “risky” user before it’s too late.

The entire process consists of four areas, each with its key activities highlighted, as illustrated in the graphic above. You need to look at how, when and why people interact with sensitive data, correlate behavior with the context of user actions, and only on that basis assess risk.

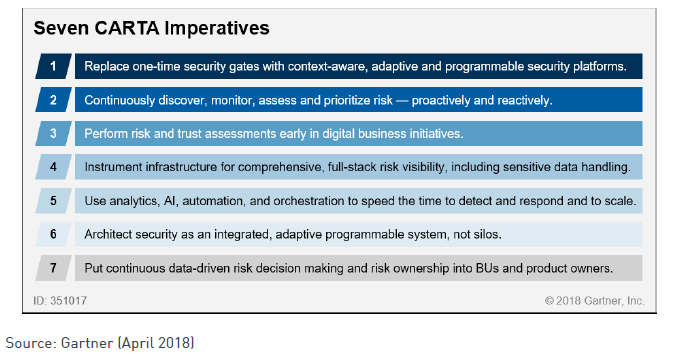

CARTA is based on 7 principles:

Imperative #1: Replace statically validating security gateways with security platforms that take into account context and variability in risk levels

Currently, much of the existing cyber security infrastructure is based on one-off decisions using predefined lists of “bad” and “good” – IP addresses, accounts, operations. This approach is flawed for two main reasons:

- There is no pre-existing signature for a zero-day or targeted APT attack.

- Once the bad guys (and insider threats) have gained authenticated access, their activity past the gate is invisible. Once access is granted, administrators have limited visibility into the activities of users, systems and executable code.

To compensate for this, we subject users to longer and longer malware scans and an increasingly stringent password regime, in a futile effort to answer one question: grant access or not? The initial decision can never be perfect, because its consequences only show up afterwards and have to be evaluated continuously. That’s why CARTA moves away from one-time authorization decisions toward continuously evaluated decisions based on the risk level of the user account.
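
The difference between a one-time gate and a continuously evaluated decision can be sketched in a few lines of Python. This is only an illustration, not Gartner’s model: the event names, their risk weights and the `RISK_LIMIT` threshold are hypothetical values chosen for the example.

```python
from dataclasses import dataclass, field

# Hypothetical risk added by each observed event; real weights would come from policy.
EVENT_RISK = {
    "login_known_device": 0.0,
    "login_new_device": 0.3,
    "bulk_download": 0.4,
    "privilege_change": 0.5,
}
DEFAULT_EVENT_RISK = 0.1  # assumed weight for events not listed above
RISK_LIMIT = 0.7          # assumed threshold at which access is withdrawn


@dataclass
class Session:
    """Tracks the accumulated risk of one user session."""
    user: str
    risk: float = 0.0
    history: list = field(default_factory=list)

    def observe(self, event: str) -> bool:
        """Record an event, update the risk score and re-decide access."""
        self.risk = min(1.0, self.risk + EVENT_RISK.get(event, DEFAULT_EVENT_RISK))
        allowed = self.risk < RISK_LIMIT
        self.history.append((event, self.risk, allowed))
        return allowed


session = Session("alice")
decisions = [
    session.observe(event)
    for event in ("login_known_device", "bulk_download", "privilege_change")
]
print(decisions)  # [True, True, False]
```

The login itself is allowed, but the decision is revisited after every event, so the session loses access once its accumulated risk crosses the limit.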

Imperative #2: Detect, monitor, assess and prioritize risk – proactively and reactively

Traditional security infrastructure treats risk and trust as binary and fixed and often derives trust from system ownership. This is not sufficient to support a business where it is necessary to provide access to systems and data to partners and customers anywhere, anytime on any device, often from infrastructure that we do not own or control.

Risk and trust rise and fall based on the context and behaviors of individuals during sessions (and relative to baselines established between sessions). Risk and trust models can be developed based on observed patterns. If risk becomes too high and outweighs trust, we can take steps to reduce the risk or to increase trust in the subject and the requested actions.

- Increasing trust: e.g., requesting stronger authentication to increase our confidence that the user is indeed who they say they are. Alternatively, the user may be restricted to downloading files only to a trusted device.

- Reducing risk: e.g., wrapping content with digital rights management during download or, alternatively, blocking downloads altogether.
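
The two kinds of response above can be combined into a single decision function. The sketch below is an assumption about how such a policy might look: the `Action` names, the ordering of the responses and the `DRM_MAX_GAP` threshold are hypothetical, and risk and trust are assumed to be scores between 0 and 1.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow download"
    STEP_UP_AUTH = "request stronger authentication"
    WRAP_WITH_DRM = "allow download wrapped in DRM"
    BLOCK = "block download"


DRM_MAX_GAP = 0.3  # assumed: largest risk/trust gap that DRM is allowed to cover


def decide_download(risk: float, trust: float, strong_auth_done: bool) -> Action:
    """Pick a response to a download request from the current risk and trust scores."""
    gap = risk - trust
    if gap <= 0:
        return Action.ALLOW          # trust already outweighs risk
    if not strong_auth_done:
        return Action.STEP_UP_AUTH   # first try to increase trust
    if gap <= DRM_MAX_GAP:
        return Action.WRAP_WITH_DRM  # then reduce risk while still allowing the work
    return Action.BLOCK              # last resort


print(decide_download(0.2, 0.5, strong_auth_done=False).value)
print(decide_download(0.6, 0.5, strong_auth_done=False).value)
print(decide_download(0.6, 0.5, strong_auth_done=True).value)
print(decide_download(0.9, 0.2, strong_auth_done=True).value)
```

The point of the design is that “block” is only one of four outcomes; the intermediate answers are the “maybe” and “yes, but with restrictions” that a binary gate cannot give.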

Imperative #3: Conduct risk and trust assessments early in digital initiatives

Earlier imperatives focused on operational security and risk protection during process execution. However, that process is being enhanced with more and more infrastructure – physical and logical. This expansion is being done through, among other things:

- Development of applications, services and products.

- Purchase of IT systems.

- Taking advantage of new SaaS, PaaS or IaaS services.

- Downloading new applications and services for local installation.

- Selecting and deploying new operational technology (OT) and Internet of Things (IoT) devices.

- Opening up internal systems and data through application programming interfaces (APIs).

In all of these areas, information security suffers from the same problems we’ve described in previous sections – an over-reliance on one-off, intensive GOOD/BAD assessments. We cannot evaluate a system without considering the entire environment – other systems, processes, and users. As the environment changes – the risk level of the system changes. In the table below, we present the fundamental difference between an ongoing, proactive assessment of risk level – and a reactive approach:

Table: Proactive and Reactive Risk Detection and Assessment

| Security Area | Proactive Approach | Reactive Approach |
| --- | --- | --- |
| Defending against cyber attacks | Where and how will attackers target me? How real is the threat? How should I adjust my security posture to reduce exposure to the threat? What can I do proactively to reduce the attack surface? How can I prioritize my remediation efforts by risk? | Where am I being breached? Are these attacks real and which incidents pose the greatest risk? |
| Identity and access management (IAM) | Where and how will users need access? (For example, to new SaaS applications, BU applications, etc.) How widespread is this access? What risks does it represent? How can I mitigate the risk by applying controls (privileged access management, multi-factor authentication)? How critical and sensitive are these resources? How can I ensure sufficient access to the resource in a timely manner? What assurance do I have that a user is who they say they are before I grant access? | Where do user access/use patterns present enough risk that I need to respond? How confident am I that the incident is not a false positive? How valuable is this user and the system/data they are accessing? What is the current threat level? Is there a pattern of suspicious activity among multiple users/domains? Where is user access overly permissive? |
| Data security | Where is sensitive data created in my enterprise? Where is sensitive data created and stored outside my enterprise? What is considered “valuable” and why? How is sensitive/valuable data protected? Are risks being managed? | Where does improper handling of sensitive data occur? How sensitive/valuable is the data? Does it pose enough risk that I need to respond? |
| Business Continuity Management (BCM) and Cyberresilience | What areas/business processes have the greatest impact on revenue in the event of a loss of service? Which systems support this? | In the event of a suspected failure: Is the failure confirmed? What business processes and revenues are directly impacted? Which systems/processes need to be restored first? |

Imperative #4: Build a security infrastructure that provides full-stack risk visibility, including the processing of sensitive data

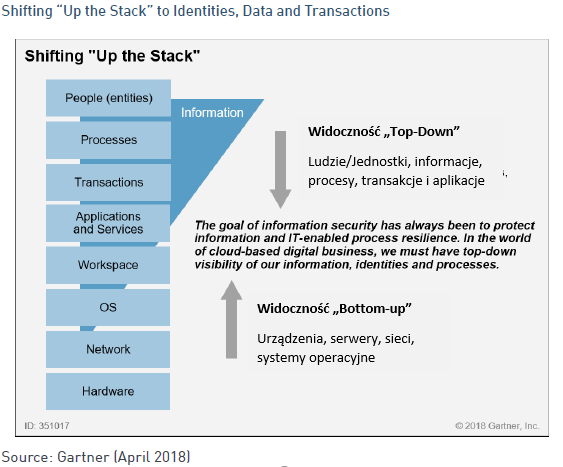

The goal should be visibility into behavior on the network and on end devices whenever possible. At the same time, traditional security infrastructure has been overly dependent on it. Why is that a problem? Because this approach won’t work when we no longer own the network, server, OS or application, as is the case in the cloud model. So we need to extend visibility and protection to information, applications and data, just as we protected networks and endpoints in the past. This will be especially important for protecting users and data in cloud-based services. Endpoint and network visibility is still important (if we have it), but it needs to be augmented with top-down visibility, as shown in the figure above. We should monitor everything we can: all activities, interactions, transactions and behaviors that provide situational awareness of users, devices and their behaviors.

To summarize Imperative #4: security operations must assess and monitor identity/account, data and process risks.

Imperative #5: Use analytics, AI, automation and orchestration tools to accelerate detection and response times and scale limited resources.

To identify meaningful risk indicators, analyze the data using multiple analytical methods, including:

- Traditional signatures.

- Behavioral signatures.

- Correlation.

- Deception techniques.

- Pattern matching.

- Baseline and anomaly detection by comparing to historical behavioral patterns and peers (groups or organizations).

- Entity linkage analysis.

- Similarity analysis.

- Neural networks.

- Machine learning (supervised and unsupervised).

- Deep learning.
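
As an illustration of the “baseline and anomaly detection” item in the list above, here is a minimal sketch that compares today’s activity with a user’s own history using a z-score. The metric (files downloaded per day), the sample data and the `THRESHOLD` cut-off are assumptions made for the example; a real platform would use richer baselines, including peer groups.

```python
from statistics import mean, stdev


def anomaly_score(baseline: list[float], observed: float) -> float:
    """Return how many standard deviations `observed` lies from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs at least two points
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is maximally unusual.
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


# Hypothetical history: files downloaded per day by one user.
daily_downloads = [10, 12, 11, 9, 13]
THRESHOLD = 3.0  # assumed cut-off, a common rule of thumb rather than a CARTA requirement

for today in (12, 60):
    score = anomaly_score(daily_downloads, today)
    print(today, round(score, 2), "anomalous" if score > THRESHOLD else "normal")
```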

We must acknowledge that risk identification in digital business will require a set of layered mathematical and analytical approaches applied to an ever-growing data set. These techniques differ in cost:

- Signatures and pattern matching require less computing power and time.

- Machine learning requires more time and the use of historical data sets.

Over-reliance on one analytical or mathematical technique will put organizations at risk. The best security vendors and platforms will use multiple layers of analytical techniques to better detect and uncover significant risks to focus our limited resources on real and important threats. The effect is similar to a funnel that takes billions of incidents and uses analytics and context to turn them into a few high-confidence, high-value, high-risk incidents that the security team must focus on every day.
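
The funnel can be sketched as a chain of filters applied from cheapest to most expensive. The event fields (`known_benign`, `anomaly`, `asset_sensitive`), the 0.8 cut-off and the choice of three layers are hypothetical; the sketch only shows the shape of the idea.

```python
from typing import Callable

Event = dict
Layer = Callable[[Event], bool]  # returns True if the event stays in the funnel


def run_funnel(events: list[Event], layers: list[Layer]) -> tuple[list[Event], list[int]]:
    """Apply the layers in order and report how many events survive each one."""
    counts = [len(events)]
    for layer in layers:
        events = [e for e in events if layer(e)]
        counts.append(len(events))
    return events, counts


# Hypothetical layers, ordered from cheap to expensive.
layers = [
    lambda e: not e["known_benign"],  # signature / allowlist match
    lambda e: e["anomaly"] >= 0.8,    # behavioral anomaly score (assumed precomputed)
    lambda e: e["asset_sensitive"],   # business context
]

events = [
    {"id": 1, "known_benign": True, "anomaly": 0.1, "asset_sensitive": False},
    {"id": 2, "known_benign": False, "anomaly": 0.2, "asset_sensitive": True},
    {"id": 3, "known_benign": False, "anomaly": 0.9, "asset_sensitive": False},
    {"id": 4, "known_benign": False, "anomaly": 0.95, "asset_sensitive": True},
]

incidents, counts = run_funnel(events, layers)
print(counts)                         # [4, 3, 2, 1]
print([e["id"] for e in incidents])   # [4]
```

Putting the cheap layers first means the expensive ones only ever see the events that survived, which is what lets the same pattern scale from four events to billions.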

The “defense in depth” security principle must be extended with “analytics in depth.”

Automation, orchestration and artificial intelligence (AI) will accelerate our response time. We must use them as a resource multiplier for the team, improving the efficiency and effectiveness of our limited security operations resources. We don’t have time to investigate every incident.

Big data analytics and machine learning will allow our limited staff to focus on answering three essential questions:

- Is the detected risk real?

- What is the threat level associated with that risk?

- Are the resources subject to this risk significant?

Imperative #6: Design IT security as an integrated, adaptable system rather than as “security silos” that protect sensitive areas point by point.

Traditional security infrastructure is about protecting individual areas – for example, IAM, end devices, networks or applications. There are many problems with this approach:

- Siloed controls create too many vendors and consoles, adding complexity and increasing the risk of misconfiguration.

- Each silo doesn’t have enough context for high-confidence detection, creating too many alerts. Many of these are false alerts or represent low risk.

- Events from siloed controls are often passed to the SIEM, which may or may not make sense of the additional events (garbage in, garbage out). This creates another source of alerts, many of which are false positives or low risk.

In reality, advanced attacks span several of our security silos, and effective detection involves correlating signals from all of them. For example, an attacker launches an email-based attack:

1. The email contains a URL leading to malicious content.

2. The content is loaded onto an endpoint device, where a vulnerability is exploited to launch a keystroke monitoring program that captures access credentials.

3. The access credentials are then used to spread to other systems and ultimately to access and exfiltrate sensitive data.

As with the blind men and the elephant, each silo contains a piece of the story, but none has the full picture. The best security protection will act as a system that combines visibility into these silos, or eliminates them altogether, to improve detection. Once an incident is detected, the offending entity (user account, file, URL, executable, etc.) can be immediately shared and blocked across all channels (endpoint device, server, email and network) to prevent propagation. Security as a system provides holistic visibility into the IT ecosystem your assets are connected to, rather than only the part you control, which was the previous paradigm.
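
The email-based attack above can serve as a small worked example of cross-silo correlation. In this sketch the alert format, the silo names, the `min_silos` threshold and the `block_everywhere` helper are all hypothetical; a real platform would correlate on more than one key and would push the block through each product’s API.

```python
from collections import defaultdict


def correlate(alerts: list[dict], min_silos: int = 3) -> dict[str, list[str]]:
    """Group alerts by entity and keep entities seen in at least `min_silos` silos."""
    silos_by_entity: dict[str, set[str]] = defaultdict(set)
    for alert in alerts:
        silos_by_entity[alert["entity"]].add(alert["silo"])
    return {
        entity: sorted(silos)
        for entity, silos in silos_by_entity.items()
        if len(silos) >= min_silos
    }


def block_everywhere(entity: str, channels: list[str]) -> list[str]:
    """Return the block actions to push out (a stand-in for real API calls)."""
    return [f"block {entity} on {channel}" for channel in channels]


alerts = [
    {"silo": "email", "entity": "bob", "detail": "clicked URL with malicious content"},
    {"silo": "endpoint", "entity": "bob", "detail": "keystroke logger started"},
    {"silo": "identity", "entity": "bob", "detail": "credentials used on unusual systems"},
    {"silo": "email", "entity": "carol", "detail": "suspicious URL received"},
]
CHANNELS = ["endpoint", "server", "email", "network"]

for entity in correlate(alerts):
    print(block_everywhere(entity, CHANNELS))
```

Each alert on its own is low confidence; only the combination across three silos singles out one account, which is the piece of context no individual silo has.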

Security platforms must:

- Be software-defined: software-based products, fully programmable and accessible via APIs.

- Have the ability to exchange context using standards such as STIX, TAXII or JSON, and support industry-specific information sharing and analysis centers such as FS-ISAC as well as context-exchange frameworks such as OpenDXL or pxGrid.

- Enable continuous assessment of the level of risk, rather than relying on high-risk one-time permit/deny access assessments.

- Have the ability to store and process increasing amounts of data, as more and more visibility (and thus data) is needed to make efficient and effective security decisions. Charges for increased storage or computing capabilities inhibit these decisions.
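
To make the point about exchanging context concrete, here is a sketch that builds a STIX 2.1 Indicator for a malicious URL as plain JSON, using only the Python standard library. The helper name, the indicator name and the example URL are made up, and the object is not validated against the STIX schema, so treat it as an approximation of the format rather than a reference implementation.

```python
import json
import uuid
from datetime import datetime, timezone


def make_url_indicator(url: str, now: datetime) -> dict:
    """Build a minimal STIX 2.1 Indicator for a malicious URL (not schema-validated).

    `now` must be timezone-aware; `url` must not contain single quotes,
    because this sketch does not escape the pattern string.
    """
    ts = now.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.%fZ")
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": ts,
        "modified": ts,
        "name": "URL seen in phishing email",  # illustrative label
        "pattern": f"[url:value = '{url}']",
        "pattern_type": "stix",
        "valid_from": ts,
    }


indicator = make_url_indicator(
    "http://malicious.example/payload",  # hypothetical URL
    datetime(2021, 1, 1, tzinfo=timezone.utc),
)
print(json.dumps(indicator, indent=2))
```

An object like this could then be distributed over a TAXII server or an exchange fabric so that every control point learns about the indicator at the same time.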

Imperative #7: Implement data-driven decision making and risk accountability at the business unit and product owner level

Our risk management and compliance processes are subject to the same constraints as the one-time permit/deny access assessments discussed earlier. Today, we too often use rigid and lengthy procedures and checklists to evaluate the effectiveness of our security program. To keep up with the evolving threat landscape, we should move away from static checklists and implement a risk level assessment process: an ongoing process that takes into account changes in behavior over time. This mindset is captured in an approach that Gartner calls Integrated Risk Management (IRM): a set of practices and processes supported by a risk-aware culture and enabling technologies, which improves decision making and performance through an integrated view of how well an organization manages its unique set of risks. IRM involves prioritizing a proactive approach to risk detection.

Table: Proactive vs Reactive approach to risk detection

| Area | Proactive risk detection | Reactive risk detection |
| --- | --- | --- |
| Risk management | What new digital business initiatives are planned? What are existing digital initiatives with a low threshold of reputational risk? What new regulatory requirements am I subject to and what is the internal political appetite to meet them? What are the associated cyber risks? How serious are these cyber threats? How valuable is the new initiative (revenues, costs, liabilities)? What new business opportunities are available if I accept more risk? What commitments to shareholders or other stakeholders should I trace back to digital business risk decisions? | What is my overall risk position across all BUs, partners, projects and infrastructure? Where are the high risk areas? Where are risk mitigation procedures not being followed and what is the impact? What assets/services are at risk and what is their value? What safeguards are missing and what is the risk? |

Remember that embracing the principle of owner responsibility is a key prerequisite for introducing CARTA into risk management. The ultimate responsibility for protecting an enterprise’s information assets and, by extension, its business processes and performance, rests with the business owners of the information assets. Resource owners must have the authority to make the data-driven and risk-based decisions required to discharge their responsibility. Expecting the IT security team to make these decisions with an acceptable level of trust and risk on behalf of the process or asset owner will hinder not only the adoption of a strategic CARTA approach but business operations in general.

In summary, an integrated platform that provides visibility and correlation across solutions, together with a continuous process of user/device/account risk assessment, is only a matter of time and technology improvement. The real challenge is organizational: CARTA’s foundation is the shift to data-driven decision-making in the hands of business units and product owners, along with a risk-conscious mindset. Security and risk management must become a set of interwoven, continuous and adaptive processes. Working with business units, we define acceptable levels of trust and risk that translate into security policy guidelines.

These policies and acceptable risk levels flow through our security infrastructure, changing:

- How we create and acquire new IT services.

- How we evaluate and assess new partners in the digital business ecosystem (an increasing portion of which is outside our control).

- How we protect and enable access to our systems and data as we execute processes.

Security decisions, once made, should be constantly re-evaluated (not necessarily by a human) to ensure that risk and trust stay balanced at a level that the business and business unit owners, not IT, deem appropriate.